HPC Specification

UAHPC

The University of Alabama High-Performance Computer (UAHPC) is a dynamically configured cluster of 81 nodes, built from Dell PowerEdge M620, M630, M640, C6420, R700, R800, and R900 models. This heterogeneous setup provides 3408 CPU cores and 10 GPUs, with a combined theoretical single-precision performance of 342.88 TFLOPS and 624 GB of GPU memory. The cluster supports a wide range of research applications across the University of Alabama’s academic community.

Node CPU Specs:

This table summarizes the CPU hardware specification for the nodes:

| NodeName | CPUTot | ThreadsPerCore | CoresPerSocket | Sockets | Model name | CPU MHz | CPU max MHz | Partition | CPU TFLOPS |

|---|---|---|---|---|---|---|---|---|---|

| compute-0-0 | 40 | 1 | 10 | 4 | Intel(R) Xeon(R) CPU E7-8891 v4 @ 2.80GHz | 1697.718 | 3500.0 | threaded | 4.48 |

| compute-0-1 | 40 | 1 | 10 | 4 | Intel(R) Xeon(R) CPU E7-8891 v4 @ 2.80GHz | 1598.625 | 3500.0 | threaded | 4.48 |

| compute-0-2 | 40 | 1 | 10 | 4 | Intel(R) Xeon(R) CPU E7-8891 v4 @ 2.80GHz | 1595.015 | 3500.0 | threaded | 4.48 |

| compute-0-3 | 40 | 1 | 10 | 4 | Intel(R) Xeon(R) CPU E7-8891 v4 @ 2.80GHz | 1596.875 | 3500.0 | threaded | 4.48 |

| compute-1-0 | 24 | 1 | 12 | 2 | Intel(R) Xeon(R) Gold 6126 CPU @ 2.60GHz | 2600.000 | 2600.0 | main,long | 2.00 |

| compute-1-1 | 24 | 1 | 12 | 2 | Intel(R) Xeon(R) Gold 6126 CPU @ 2.60GHz | 2600.000 | 2600.0 | main,long | 2.00 |

| compute-1-2 | 24 | 1 | 12 | 2 | Intel(R) Xeon(R) Gold 6126 CPU @ 2.60GHz | 2600.000 | 2600.0 | main,long | 2.00 |

| compute-1-3 | 24 | 1 | 12 | 2 | Intel(R) Xeon(R) Gold 6126 CPU @ 2.60GHz | 2600.000 | 2600.0 | main,long | 2.00 |

| compute-1-4 | 24 | 1 | 12 | 2 | Intel(R) Xeon(R) Gold 6126 CPU @ 2.60GHz | 2600.000 | 2600.0 | main,long | 2.00 |

| compute-1-5 | 24 | 1 | 12 | 2 | Intel(R) Xeon(R) Gold 6126 CPU @ 2.60GHz | 2600.000 | 2600.0 | main,long | 2.00 |

| compute-1-6 | 24 | 1 | 12 | 2 | Intel(R) Xeon(R) Gold 6126 CPU @ 2.60GHz | 2600.000 | 2600.0 | main,long | 2.00 |

| compute-1-7 | 24 | 1 | 12 | 2 | Intel(R) Xeon(R) Gold 6126 CPU @ 2.60GHz | 2600.000 | 2600.0 | main,long | 2.00 |

| compute-1-8 | 16 | 1 | 8 | 2 | Intel(R) Xeon(R) Gold 6126 CPU @ 2.60GHz | 2600.000 | 2600.0 | main,ultrahigh | 2.00 |

| compute-1-9 | 36 | 1 | 18 | 2 | Intel(R) Xeon(R) Gold 6126 CPU @ 2.60GHz | 2600.000 | 2600.0 | main,owners | 2.00 |

| compute-1-10 | 36 | 1 | 18 | 2 | Intel(R) Xeon(R) Gold 6126 CPU @ 2.60GHz | 2600.000 | 2600.0 | main,owners | 2.00 |

| compute-1-11 | 36 | 1 | 18 | 2 | Intel(R) Xeon(R) Gold 6126 CPU @ 2.60GHz | 2600.000 | 2600.0 | main,owners | 2.00 |

| compute-2-0 | 24 | 1 | 12 | 2 | Intel(R) Xeon(R) Gold 6126 CPU @ 2.60GHz | 2600.000 | 2600.0 | main,highmem | 2.00 |

| compute-2-1 | 24 | 1 | 12 | 2 | Intel(R) Xeon(R) Gold 6126 CPU @ 2.60GHz | 2600.000 | 2600.0 | main,highmem | 2.00 |

| compute-2-2 | 24 | 1 | 12 | 2 | Intel(R) Xeon(R) Gold 6126 CPU @ 2.60GHz | 2600.000 | 2600.0 | main,highmem | 2.00 |

| compute-2-3 | 24 | 1 | 12 | 2 | Intel(R) Xeon(R) Gold 6126 CPU @ 2.60GHz | 2600.000 | 2600.0 | main,highmem | 2.00 |

| compute-2-4 | 20 | 1 | 10 | 2 | Intel(R) Xeon(R) Gold 6126 CPU @ 2.60GHz | 2600.000 | 2600.0 | main,highmem | 2.00 |

| compute-2-5 | 20 | 1 | 10 | 2 | Intel(R) Xeon(R) Gold 6126 CPU @ 2.60GHz | 2600.000 | 2600.0 | main,highmem | 2.00 |

| compute-4-12 | 16 | 1 | 8 | 2 | Intel(R) Xeon(R) Gold 6126 CPU @ 2.60GHz | 2600.000 | 2600.0 | main,owners | 2.00 |

| compute-4-13 | 16 | 1 | 8 | 2 | Intel(R) Xeon(R) Gold 6126 CPU @ 2.60GHz | 2600.000 | 2600.0 | main,owners | 2.00 |

| compute-5-0 | 16 | 1 | 8 | 2 | Intel(R) Xeon(R) Gold 6126 CPU @ 2.60GHz | 2600.000 | 2600.0 | main,owners | 2.00 |

| compute-5-1 | 16 | 1 | 8 | 2 | Intel(R) Xeon(R) Gold 6126 CPU @ 2.60GHz | 2600.000 | 2600.0 | main,owners | 2.00 |

| compute-5-2 | 20 | 1 | 10 | 2 | Intel(R) Xeon(R) Gold 6126 CPU @ 2.60GHz | 2600.000 | 2600.0 | main,owners | 2.00 |

| compute-5-3 | 20 | 1 | 10 | 2 | Intel(R) Xeon(R) Gold 6126 CPU @ 2.60GHz | 2600.000 | 2600.0 | main,owners | 2.00 |

| compute-5-4 | 20 | 1 | 10 | 2 | Intel(R) Xeon(R) Gold 6126 CPU @ 2.60GHz | 2600.000 | 2600.0 | main,owners | 2.00 |

| compute-5-5 | 20 | 1 | 10 | 2 | Intel(R) Xeon(R) Gold 6126 CPU @ 2.60GHz | 2600.000 | 2600.0 | main,owners | 2.00 |

| compute-5-6 | 20 | 1 | 10 | 2 | Intel(R) Xeon(R) Gold 6126 CPU @ 2.60GHz | 2600.000 | 2600.0 | main,owners,highmem | 2.00 |

| compute-5-7 | 48 | 1 | 24 | 2 | Intel(R) Xeon(R) Gold 6126 CPU @ 2.60GHz | 2600.000 | 2600.0 | main,owners | 2.00 |

| compute-5-8 | 48 | 1 | 24 | 2 | Intel(R) Xeon(R) Gold 6126 CPU @ 2.60GHz | 2600.000 | 2600.0 | main,owners | 2.00 |

| compute-5-9 | 24 | 1 | 12 | 2 | Intel(R) Xeon(R) Gold 6126 CPU @ 2.60GHz | 2600.000 | 2600.0 | main,owners | 2.00 |

| compute-5-10 | 24 | 1 | 12 | 2 | Intel(R) Xeon(R) Gold 6126 CPU @ 2.60GHz | 2600.000 | 2600.0 | main,owners | 2.00 |

| compute-5-11 | 24 | 1 | 12 | 2 | Intel(R) Xeon(R) Gold 6126 CPU @ 2.60GHz | 2600.000 | 2600.0 | main,owners | 2.00 |

| compute-14-0 | 64 | 1 | 64 | 1 | Intel(R) Xeon(R) Gold 6126 CPU @ 2.60GHz | 2600.000 | 2600.0 | main,mcubes | 2.00 |

| compute-14-1 | 64 | 1 | 64 | 1 | Intel(R) Xeon(R) Gold 6126 CPU @ 2.60GHz | 2600.000 | 2600.0 | main,mcubes | 2.00 |

| compute-14-2 | 64 | 1 | 64 | 1 | Intel(R) Xeon(R) Gold 6126 CPU @ 2.60GHz | 2600.000 | 2600.0 | main,mcubes | 2.00 |

| compute-14-3 | 64 | 1 | 64 | 1 | Intel(R) Xeon(R) Gold 6126 CPU @ 2.60GHz | 2600.000 | 2600.0 | main,mcubes | 2.00 |

| compute-14-4 | 64 | 1 | 64 | 1 | Intel(R) Xeon(R) Gold 6126 CPU @ 2.60GHz | 2600.000 | 2600.0 | main,mcubes | 2.00 |

| compute-14-5 | 64 | 1 | 64 | 1 | Intel(R) Xeon(R) Gold 6126 CPU @ 2.60GHz | 2600.000 | 2600.0 | main,mcubes | 2.00 |

| compute-16-0 | 64 | 1 | 64 | 1 | Intel(R) Xeon(R) Gold 6126 CPU @ 2.60GHz | 2600.000 | 2600.0 | main,mcubes | 2.00 |

| compute-16-1 | 64 | 1 | 64 | 1 | Intel(R) Xeon(R) Gold 6126 CPU @ 2.60GHz | 2600.000 | 2600.0 | main,mcubes | 2.00 |

| compute-16-2 | 64 | 1 | 64 | 1 | Intel(R) Xeon(R) Gold 6126 CPU @ 2.60GHz | 2600.000 | 2600.0 | main,mcubes | 2.00 |

| compute-16-3 | 64 | 1 | 64 | 1 | Intel(R) Xeon(R) Gold 6126 CPU @ 2.60GHz | 2600.000 | 2600.0 | main,mcubes | 2.00 |

| compute-16-4 | 64 | 1 | 64 | 1 | Intel(R) Xeon(R) Gold 6126 CPU @ 2.60GHz | 2600.000 | 2600.0 | main,mcubes | 2.00 |

| compute-16-5 | 64 | 1 | 64 | 1 | Intel(R) Xeon(R) Gold 6126 CPU @ 2.60GHz | 2600.000 | 2600.0 | main,mcubes | 2.00 |

| compute-17-0 | 16 | 1 | 8 | 2 | Intel(R) Xeon(R) Gold 6126 CPU @ 2.60GHz | 2600.000 | 2600.0 | main,owners | 2.00 |

| compute-17-1 | 16 | 1 | 8 | 2 | Intel(R) Xeon(R) Gold 6126 CPU @ 2.60GHz | 2600.000 | 2600.0 | main,owners | 2.00 |

| compute-17-2 | 16 | 1 | 8 | 2 | Intel(R) Xeon(R) Gold 6126 CPU @ 2.60GHz | 2600.000 | 2600.0 | main,owners | 2.00 |

| compute-17-3 | 16 | 1 | 8 | 2 | Intel(R) Xeon(R) Gold 6126 CPU @ 2.60GHz | 2600.000 | 2600.0 | main,owners | 2.00 |

| compute-17-4 | 16 | 1 | 8 | 2 | Intel(R) Xeon(R) Gold 6126 CPU @ 2.60GHz | 2600.000 | 2600.0 | main,owners | 2.00 |

| compute-17-5 | 16 | 1 | 8 | 2 | Intel(R) Xeon(R) Gold 6126 CPU @ 2.60GHz | 2600.000 | 2600.0 | main,owners | 2.00 |

| compute-17-6 | 16 | 1 | 8 | 2 | Intel(R) Xeon(R) Gold 6126 CPU @ 2.60GHz | 2600.000 | 2600.0 | main,owners | 2.00 |

| compute-17-7 | 16 | 1 | 8 | 2 | Intel(R) Xeon(R) Gold 6126 CPU @ 2.60GHz | 2600.000 | 2600.0 | main,owners | 2.00 |

| compute-17-8 | 16 | 1 | 8 | 2 | Intel(R) Xeon(R) Gold 6126 CPU @ 2.60GHz | 2600.000 | 2600.0 | main,owners | 2.00 |

| compute-17-9 | 16 | 1 | 8 | 2 | Intel(R) Xeon(R) Gold 6126 CPU @ 2.60GHz | 2600.000 | 2600.0 | main,owners | 2.00 |

| compute-17-10 | 24 | 1 | 12 | 2 | Intel(R) Xeon(R) Gold 6126 CPU @ 2.60GHz | 2600.000 | 2600.0 | main,owners | 2.00 |

| compute-17-11 | 16 | 1 | 8 | 2 | Intel(R) Xeon(R) Gold 6126 CPU @ 2.60GHz | 2600.000 | 2600.0 | main,owners | 2.00 |

| compute-20-0 | 48 | 1 | 12 | 4 | Intel(R) Xeon(R) Gold 6126 CPU @ 2.60GHz | 2600.000 | 2600.0 | main,ultrahigh | 2.00 |

| compute-20-1 | 64 | 1 | 64 | 1 | Intel(R) Xeon(R) Gold 6126 CPU @ 2.60GHz | 2600.000 | 2600.0 | main,mcubes | 2.00 |

| compute-20-2 | 64 | 1 | 64 | 1 | Intel(R) Xeon(R) Gold 6126 CPU @ 2.60GHz | 2600.000 | 2600.0 | main,mcubes | 2.00 |

| compute-20-3 | 64 | 1 | 64 | 1 | Intel(R) Xeon(R) Gold 6126 CPU @ 2.60GHz | 2600.000 | 2600.0 | main,mcubes | 2.00 |

| compute-20-4 | 88 | 1 | 22 | 4 | Intel(R) Xeon(R) Gold 6126 CPU @ 2.60GHz | 2600.000 | 2600.0 | main,ultrahigh | 2.00 |

| compute-20-7 | 56 | 1 | 28 | 2 | Intel(R) Xeon(R) Gold 6126 CPU @ 2.60GHz | 2600.000 | 2600.0 | main,mh1 | 2.00 |

| compute-20-8 | 48 | 1 | 24 | 2 | Intel(R) Xeon(R) Gold 6126 CPU @ 2.60GHz | 2600.000 | 2600.0 | main,twater | 2.00 |

| compute-20-9 | 128 | 1 | 64 | 2 | Intel(R) Xeon(R) Gold 6126 CPU @ 2.60GHz | 2600.000 | 2600.0 | main,mh1 | 2.00 |

| compute-20-10 | 128 | 1 | 64 | 2 | Intel(R) Xeon(R) Gold 6126 CPU @ 2.60GHz | 2600.000 | 2600.0 | main,mh1 | 2.00 |

| compute-20-11 | 48 | 1 | 24 | 2 | Intel(R) Xeon(R) Gold 6126 CPU @ 2.60GHz | 2600.000 | 2600.0 | main,ultrahigh | 2.00 |

| compute-20-12 | 48 | 1 | 24 | 2 | Intel(R) Xeon(R) Gold 6126 CPU @ 2.60GHz | 2600.000 | 2600.0 | main,ultrahigh | 2.00 |

| compute-20-13 | 64 | 1 | 32 | 2 | Intel(R) Xeon(R) Gold 6126 CPU @ 2.60GHz | 2600.000 | 2600.0 | main,ultrahigh | 2.00 |

| compute-20-14 | 16 | 1 | 8 | 2 | Intel(R) Xeon(R) Gold 6126 CPU @ 2.60GHz | 2600.000 | 2600.0 | main,ultrahigh | 2.00 |

| compute-20-15 | 48 | 1 | 24 | 2 | Intel(R) Xeon(R) Gold 6126 CPU @ 2.60GHz | 2600.000 | 2600.0 | main,highmem | 2.00 |

| compute-20-16 | 48 | 1 | 24 | 2 | Intel(R) Xeon(R) Gold 6126 CPU @ 2.60GHz | 2600.000 | 2600.0 | main,owners | 2.00 |

| gpu-0-0 | 24 | 1 | 12 | 2 | Intel(R) Xeon(R) Silver 4116 CPU @ 2.10GHz | 2100.000 | 2100.0 | gpu,gpu_mewes | 1.61 |

| gpu-0-1 | 64 | 1 | 64 | 1 | Intel(R) Xeon(R) Silver 4116 CPU @ 2.10GHz | 2100.000 | 2100.0 | mcubes,gpu | 1.61 |

| gpu-0-2 | 128 | 1 | 64 | 2 | Intel(R) Xeon(R) Silver 4116 CPU @ 2.10GHz | 2100.000 | 2100.0 | mh1,gpu | 1.61 |

| gpu-0-3 | 128 | 1 | 64 | 2 | Intel(R) Xeon(R) Silver 4116 CPU @ 2.10GHz | 2100.000 | 2100.0 | mh1,gpu | 1.61 |

| gpu-0-4 | 128 | 1 | 64 | 2 | Intel(R) Xeon(R) Silver 4116 CPU @ 2.10GHz | 2100.000 | 2100.0 | twater,gpu | 1.61 |

| gpu-0-5 | 64 | 1 | 32 | 2 | Intel(R) Xeon(R) Silver 4116 CPU @ 2.10GHz | 2100.000 | 2100.0 | gpu,mlee91 | 1.61 |
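The columns of the CPU table are related by simple arithmetic, sketched below in Python. `CPUTot` is the product of the three topology columns, and a theoretical peak can be estimated as cores × clock × FLOPs per cycle. The function names are illustrative, and the figure of 32 single-precision FLOPs per cycle per core is an assumption that the source does not state; it was chosen because it reproduces the 4.48 TFLOPS listed for compute-0-0. Rows that share a model name list the same CPU TFLOPS regardless of `CPUTot`, so the table values may have been computed per model rather than per node.

```python
def cpu_total(threads_per_core: int, cores_per_socket: int, sockets: int) -> int:
    """Logical CPUs on a node: how CPUTot relates to the topology columns."""
    return threads_per_core * cores_per_socket * sockets


def peak_tflops(cores: int, max_ghz: float, flops_per_cycle: int) -> float:
    """Theoretical peak: cores x clock (GHz) x FLOPs per cycle, in TFLOPS."""
    return cores * max_ghz * flops_per_cycle / 1000.0


# compute-0-0: 1 thread/core, 10 cores/socket, 4 sockets, 3500 MHz max clock.
# flops_per_cycle=32 is an assumed value, not taken from the source.
cores = cpu_total(1, 10, 4)
print(cores)                                   # 40
print(round(peak_tflops(cores, 3.5, 32), 2))   # 4.48
```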

Node GPU Specs:

| NodeName | Partition | Gres | GPU TFLOPS | GPU Deep Learning TFLOPS | GPU Memory (GB) | GPU CUDA Cores | GPU Tensor Cores | GPU Half Precision TFLOPS |

|---|---|---|---|---|---|---|---|---|

| gpu-0-0 | gpu,gpu_mewes | v100:1,v100-32:1 | 28.7 | 224.0 | 48.0 | 10240.0 | 1280.0 | 250.0 |

| gpu-0-1 | mcubes,gpu | t4:1 | 8.1 | 0.0 | 16.0 | 2560.0 | 320.0 | 65.0 |

| gpu-0-2 | mh1,gpu | a100-80:1 | 19.5 | 156.0 | 80.0 | 6912.0 | 432.0 | 19.5 |

| gpu-0-3 | mh1,gpu | a100-80:1 | 19.5 | 156.0 | 80.0 | 6912.0 | 432.0 | 19.5 |

| gpu-0-4 | twater,gpu | a100-80:1 | 19.5 | 156.0 | 80.0 | 6912.0 | 432.0 | 19.5 |

| gpu-0-5 | gpu,mlee91 | a100-80:4 | 78.0 | 624.0 | 320.0 | 27648.0 | 1728.0 | 78.0 |
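The aggregate figures quoted for UAHPC (10 GPUs, 624 GB of GPU memory, 342.88 TFLOPS) are consistent with summing the per-node values in the CPU and GPU tables. The Python sketch below reproduces them. It assumes each `Gres` entry has the form `type:count`, and it groups the CPU TFLOPS column by value and row count instead of repeating all 81 rows; the variable and function names are illustrative.

```python
def gpu_count(gres: str) -> int:
    """Count GPUs in a Gres string such as 'v100:1,v100-32:1' (type:count pairs)."""
    return sum(int(item.rsplit(":", 1)[1]) for item in gres.split(","))


# (Gres, GPU TFLOPS, GPU memory in GB) per node, copied from the GPU table above.
gpu_nodes = {
    "gpu-0-0": ("v100:1,v100-32:1", 28.7, 48.0),
    "gpu-0-1": ("t4:1", 8.1, 16.0),
    "gpu-0-2": ("a100-80:1", 19.5, 80.0),
    "gpu-0-3": ("a100-80:1", 19.5, 80.0),
    "gpu-0-4": ("a100-80:1", 19.5, 80.0),
    "gpu-0-5": ("a100-80:4", 78.0, 320.0),
}

# CPU TFLOPS column grouped as (value, number of rows listing that value).
cpu_groups = [(4.48, 4), (2.00, 71), (1.61, 6)]

total_gpus = sum(gpu_count(gres) for gres, _, _ in gpu_nodes.values())
gpu_memory = sum(mem for _, _, mem in gpu_nodes.values())
gpu_tflops = sum(tflops for _, tflops, _ in gpu_nodes.values())
cpu_tflops = sum(value * rows for value, rows in cpu_groups)

print(total_gpus)                          # 10
print(gpu_memory)                          # 624.0
print(round(cpu_tflops + gpu_tflops, 2))   # 342.88
```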

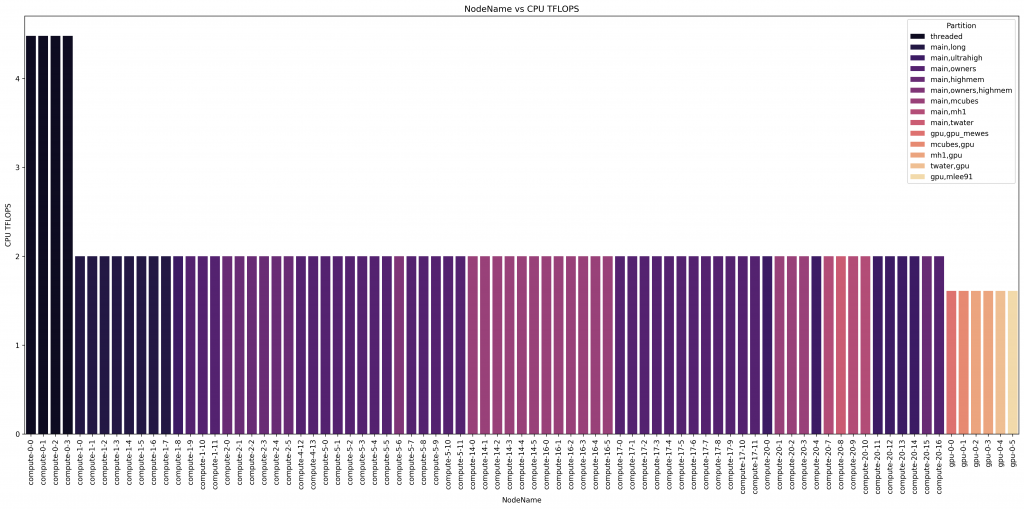

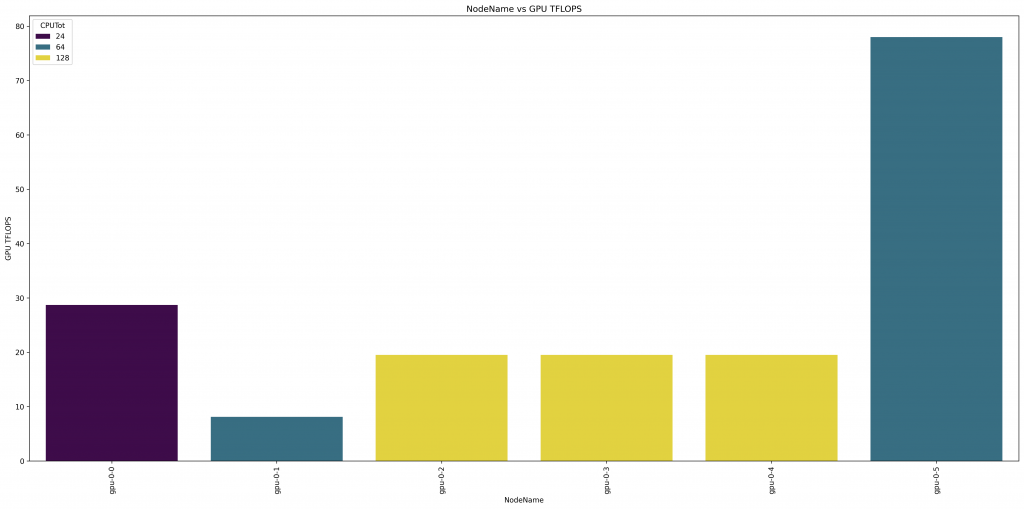

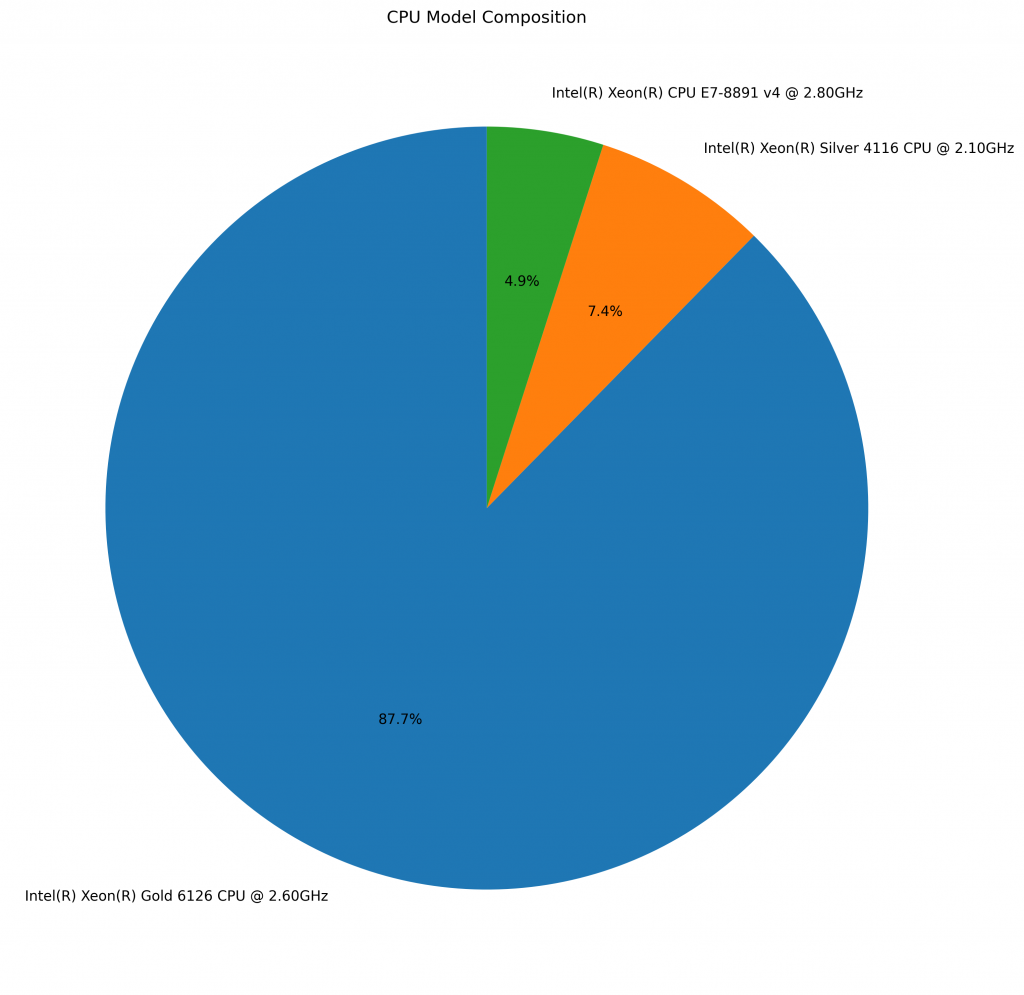

UAHPC Summary Plots

These plots summarize the compute resources of the UAHPC cluster:

Node vs CPU TFLOPS:

This plot shows the theoretical CPU compute performance of each node:

Node vs GPU:

This plot shows the theoretical GPU compute performance of each node:

CPU Composition:

This plot shows the CPU composition of each node:

UAHPC QOS Specifications:

QoS (rows) / Partition (columns) limits on UAHPC

| QoS/Partition | Main | Long | Owners | Highmem | Ultrahigh | Threaded | GPU |
|---|---|---|---|---|---|---|---|
| Main | 24 hr / job; 1500 jobs / group | X | 24 hr / job; 1500 jobs / group | X | X | X | X |
| Long | 168 hr / job; 309 cores / group | 168 hr / job; 309 cores / group | X | X | X | X | X |
| Debug | 15 min / job; 25 jobs / user | X | 15 min / job; 25 jobs / user | 15 min / job; 25 jobs / user | 15 min / job; 25 jobs / user | X | X |
| Owners | X | X | a | a | a | X | X |
| Threaded | X | X | X | X | X | 48 hr / job; 80 cores / group; 16 cores / job; 48 threads / job | X |
| GPU | X | X | X | X | X | X | 24 hr |

a: CPU limits depend on the hardware the user has purchased. Run time is unlimited. Jobs under this QoS preempt other jobs running under the long and main QoS.
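One way to read the UAHPC table is as a lookup from QoS to the partitions that accept it, plus a wall-time limit. The Python sketch below encodes that reading. It assumes that an "X" cell means the QoS is not offered on that partition; the dictionary layout, the lowercase names, and `check_request` are illustrative and not a site-provided API. Per-group and per-user job and core limits are omitted for brevity.

```python
from typing import Optional

# QoS -> (partitions that accept it, wall-time limit in hours; None = unlimited).
# Transcribed from the UAHPC QoS/partition table; "X" cells are treated as not offered.
UAHPC_QOS = {
    "main": ({"main", "owners"}, 24.0),
    "long": ({"main", "long"}, 168.0),
    "debug": ({"main", "owners", "highmem", "ultrahigh"}, 0.25),
    "owners": ({"owners", "highmem", "ultrahigh"}, None),
    "threaded": ({"threaded"}, 48.0),
    "gpu": ({"gpu"}, 24.0),
}


def check_request(qos: str, partition: str, hours: float) -> Optional[str]:
    """Return None if the request fits the table, otherwise a short reason."""
    if qos not in UAHPC_QOS:
        return f"unknown QoS {qos!r}"
    partitions, limit = UAHPC_QOS[qos]
    if partition not in partitions:
        return f"QoS {qos!r} is not offered on partition {partition!r}"
    if limit is not None and hours > limit:
        return f"{hours} hr exceeds the {limit} hr limit for QoS {qos!r}"
    return None


print(check_request("long", "main", 100))     # None
print(check_request("main", "gpu", 1))        # reason: not offered on that partition
print(check_request("debug", "highmem", 1))   # reason: exceeds the 15-minute limit
```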

CHPC

The CHPC cluster comprises 31 Dell PowerEdge R6525 and R7525 nodes with 1920 CPU cores and 5 GPUs, for a combined theoretical single-precision performance of about 170.69 TFLOPS.

Node CPU Specs:

This table summarizes the CPU hardware specification for the nodes:

| NodeName | CPUTot | ThreadsPerCore | CoresPerSocket | Sockets | Model name | CPU MHz | CPU max MHz | Partition | CPU TFLOPS |

|---|---|---|---|---|---|---|---|---|---|

| chpc-compute-12-3 | 64 | 1 | 32 | 2 | AMD EPYC 7532 32-Core Processor | 2395.446 | 2395.446 | main | 2.45 |

| chpc-compute-12-4 | 64 | 1 | 32 | 2 | AMD EPYC 7532 32-Core Processor | 2395.446 | 2395.446 | main | 2.45 |

| chpc-compute-12-5 | 64 | 1 | 32 | 2 | AMD EPYC 7532 32-Core Processor | 2395.446 | 2395.446 | main | 2.45 |

| chpc-compute-12-6 | 64 | 1 | 32 | 2 | AMD EPYC 7532 32-Core Processor | 2395.446 | 2395.446 | main | 2.45 |

| chpc-compute-12-7 | 64 | 1 | 32 | 2 | AMD EPYC 7532 32-Core Processor | 2395.446 | 2395.446 | main | 2.45 |

| chpc-compute-12-8 | 64 | 1 | 32 | 2 | AMD EPYC 7532 32-Core Processor | 2395.446 | 2395.446 | main | 2.45 |

| chpc-compute-12-9 | 64 | 1 | 32 | 2 | AMD EPYC 7532 32-Core Processor | 2395.446 | 2395.446 | main | 2.45 |

| chpc-compute-12-10 | 64 | 1 | 32 | 2 | AMD EPYC 7532 32-Core Processor | 2395.446 | 2395.446 | main | 2.45 |

| chpc-compute-12-11 | 64 | 1 | 32 | 2 | AMD EPYC 7532 32-Core Processor | 2395.446 | 2395.446 | main | 2.45 |

| chpc-compute-12-12 | 64 | 1 | 32 | 2 | AMD EPYC 7532 32-Core Processor | 2395.446 | 2395.446 | main | 2.45 |

| chpc-compute-12-13 | 64 | 1 | 32 | 2 | AMD EPYC 7532 32-Core Processor | 2395.446 | 2395.446 | main | 2.45 |

| chpc-compute-12-14 | 64 | 1 | 32 | 2 | AMD EPYC 7532 32-Core Processor | 2395.446 | 2395.446 | main | 2.45 |

| chpc-compute-12-15 | 64 | 1 | 32 | 2 | AMD EPYC 7532 32-Core Processor | 2395.446 | 2395.446 | main | 2.45 |

| chpc-compute-12-16 | 64 | 1 | 32 | 2 | AMD EPYC 7532 32-Core Processor | 2395.446 | 2395.446 | main | 2.45 |

| chpc-compute-12-17 | 64 | 1 | 32 | 2 | AMD EPYC 7532 32-Core Processor | 2395.446 | 2395.446 | main | 2.45 |

| chpc-compute-12-18 | 64 | 1 | 32 | 2 | AMD EPYC 7532 32-Core Processor | 2395.446 | 2395.446 | main | 2.45 |

| chpc-compute-12-19 | 64 | 1 | 32 | 2 | AMD EPYC 7532 32-Core Processor | 2395.446 | 2395.446 | main | 2.45 |

| chpc-compute-12-20 | 64 | 1 | 32 | 2 | AMD EPYC 7532 32-Core Processor | 2395.446 | 2395.446 | main | 2.45 |

| chpc-compute-12-21 | 64 | 1 | 32 | 2 | AMD EPYC 7532 32-Core Processor | 2395.446 | 2395.446 | main | 2.45 |

| chpc-compute-12-22 | 64 | 1 | 32 | 2 | AMD EPYC 7532 32-Core Processor | 2395.446 | 2395.446 | main | 2.45 |

| chpc-compute-12-23 | 64 | 1 | 32 | 2 | AMD EPYC 7532 32-Core Processor | 2395.446 | 2395.446 | main | 2.45 |

| chpc-compute-12-24 | 64 | 1 | 32 | 2 | AMD EPYC 7532 32-Core Processor | 2395.446 | 2395.446 | main | 2.45 |

| chpc-gpu-10-0 | 64 | 1 | 32 | 2 | AMD EPYC 7532 32-Core Processor | 2395.454 | 2395.454 | gpu | 2.45 |

| chpc-gpu-10-1 | 64 | 1 | 32 | 2 | AMD EPYC 7532 32-Core Processor | 2395.454 | 2395.454 | gpu | 2.45 |

| chpc-gpu-10-2 | 64 | 1 | 32 | 2 | AMD EPYC 7532 32-Core Processor | 2395.454 | 2395.454 | gpu | 2.45 |

| chpc-gpu-12-0 | 64 | 1 | 32 | 2 | AMD EPYC 7532 32-Core Processor | 2395.454 | 2395.454 | gpu | 2.45 |

| chpc-gpu-12-1 | 64 | 1 | 32 | 2 | AMD EPYC 7532 32-Core Processor | 2395.454 | 2395.454 | gpu | 2.45 |

| chpc-highmem-10-0 | 48 | 1 | 24 | 2 | AMD EPYC 7352 24-Core Processor | 2295.496 | 2295.496 | highmem | 1.76 |

| chpc-highmem-10-1 | 48 | 1 | 24 | 2 | AMD EPYC 7352 24-Core Processor | 2295.496 | 2295.496 | highmem | 1.76 |

| chpc-highmem-12-0 | 48 | 1 | 24 | 2 | AMD EPYC 7352 24-Core Processor | 2295.496 | 2295.496 | highmem | 1.76 |

| chpc-highmem-12-1 | 48 | 1 | 24 | 2 | AMD EPYC 7352 24-Core Processor | 2295.496 | 2295.496 | highmem | 1.76 |

Node GPU Specs:

This table summarizes the GPU hardware specification for the GPU nodes:

| NodeName | Partition | Gres | GPU TFLOPS | GPU Deep Learning TFLOPS | GPU Memory (GB) | GPU CUDA Cores | GPU Tensor Cores | GPU Half Precision TFLOPS |

|---|---|---|---|---|---|---|---|---|

| chpc-gpu-10-0 | gpu | a100:1 | 19.5 | 156.0 | 40.0 | 6912.0 | 432.0 | 312.0 |

| chpc-gpu-10-1 | gpu | a100:1 | 19.5 | 156.0 | 40.0 | 6912.0 | 432.0 | 312.0 |

| chpc-gpu-10-2 | gpu | a100:1 | 19.5 | 156.0 | 40.0 | 6912.0 | 432.0 | 312.0 |

| chpc-gpu-12-0 | gpu | a100:1 | 19.5 | 156.0 | 40.0 | 6912.0 | 432.0 | 312.0 |

| chpc-gpu-12-1 | gpu | a100:1 | 19.5 | 156.0 | 40.0 | 6912.0 | 432.0 | 312.0 |
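The totals quoted for CHPC are consistent with summing the per-node values in the two tables above. As a check, take 27 nodes at 64 cores and 2.45 TFLOPS (22 in main plus the 5 GPU hosts), 4 highmem nodes at 48 cores and 1.76 TFLOPS, and 5 A100 GPUs at 19.5 TFLOPS each:

$$
27 \times 64 + 4 \times 48 = 1920 \ \text{cores}
$$

$$
\underbrace{27 \times 2.45 + 4 \times 1.76}_{\text{CPU: } 73.19} + \underbrace{5 \times 19.5}_{\text{GPU: } 97.5} = 170.69 \ \text{TFLOPS}
$$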

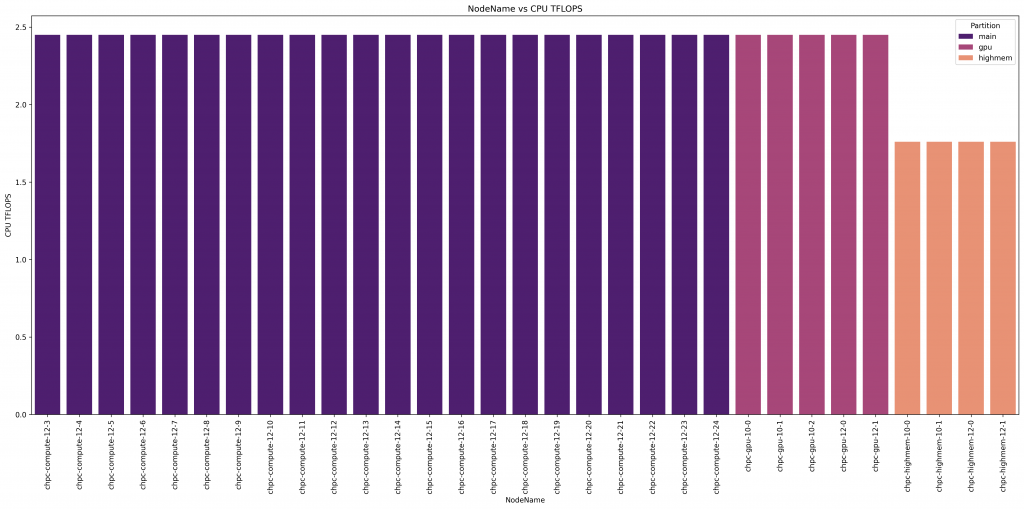

CHPC Summary Plots:

These plots summarize the compute resources of the CHPC cluster:

Node vs CPU TFLOPS:

This plot shows the theoretical CPU compute performance of each node:

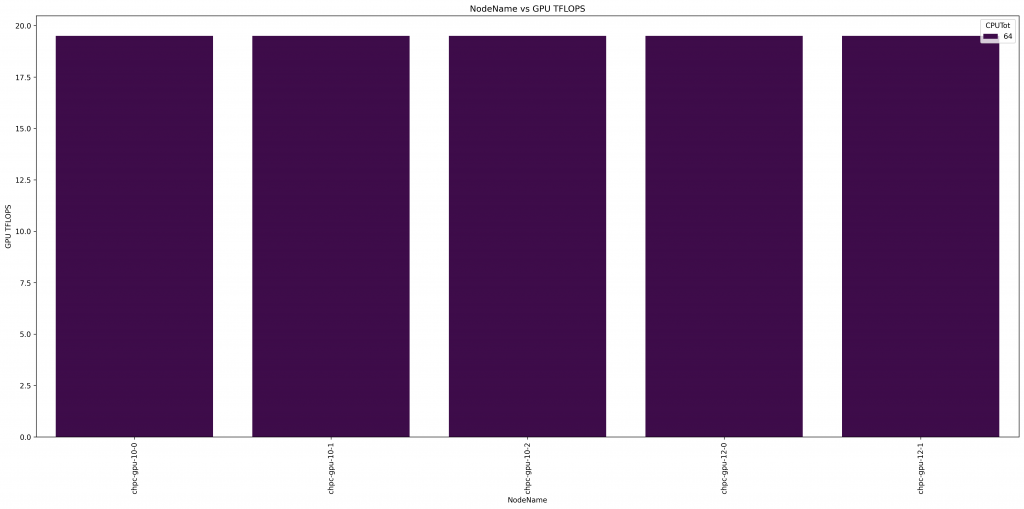

Node vs GPU:

This plot shows the theoretical GPU compute performance of each node:

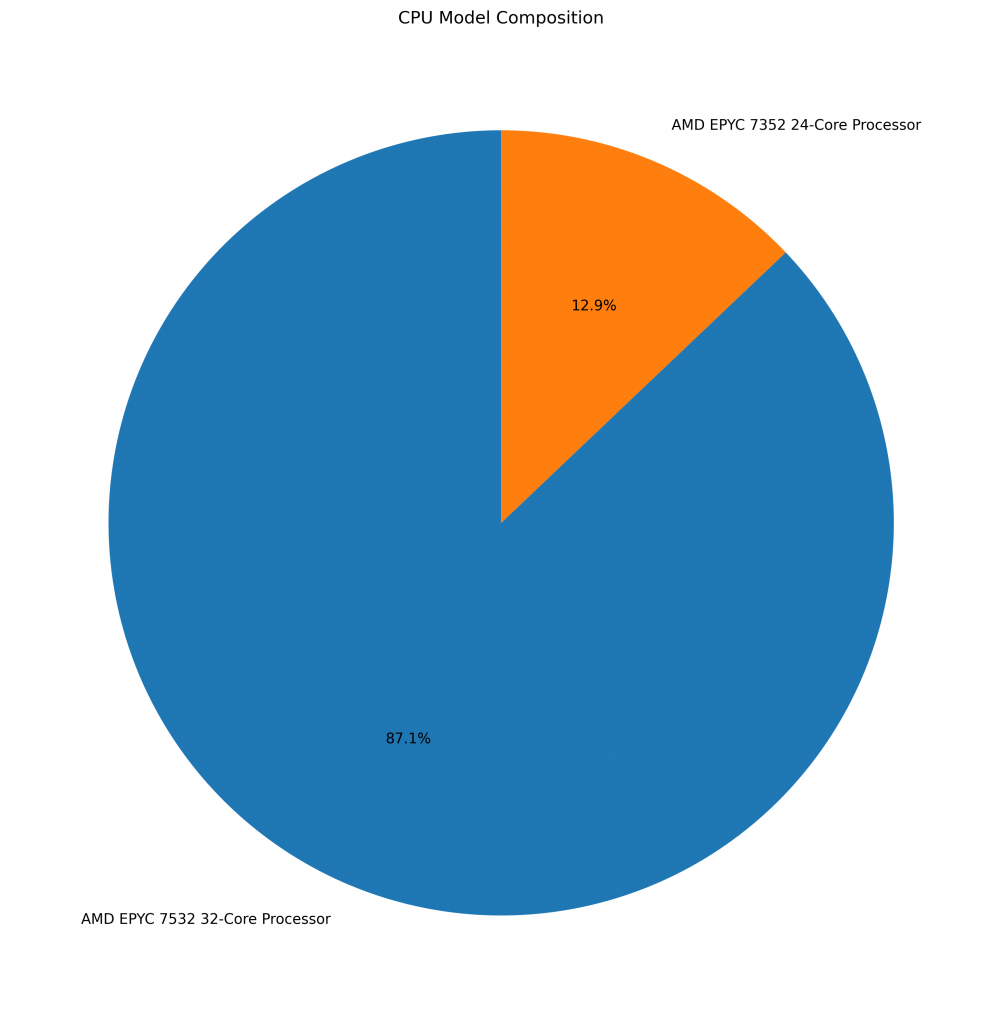

CPU Composition:

This plot shows the CPU composition of each node:

CHPC QOS Specifications:

QoS (rows) / Partition (columns) limits on CHPC

| QoS/Partition | Main | Highmem | GPU |
|---|---|---|---|
| Main | 120 hours / job; 1600 cores / group; 1000 cores / job; 1000 cores / user; 1000 jobs / user | X | X |
| Long | 336 hours / job; 500 cores / group; 300 cores / job; 300 cores / user; 300 jobs / user | X | X |
| Debug | 15 minutes / job; 128 cores / group; 32 cores / job; 4 jobs / user | 15 minutes / job; 128 cores / group; 32 cores / job; 4 jobs / user | X |
| Highmem | X | 168 hours / job; 192 cores / group; 100 cores / user; 100 jobs / user; 8 TB memory / group; 6 TB memory / user | X |
| GPU | X | X | 48 hours / job; 320 cores / group; 192 cores / job; 5 GPUs / group; 3 GPUs / user; 3 jobs / user |
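Because each CHPC cell combines several limits, it can help to check a planned job against all of them at once. The Python sketch below does this for the per-job wall time, per-job core count, and per-user GPU count taken from the table. The `Limit` class, the lowercase QoS and partition names, and the `violations` function are illustrative assumptions, and group-level and job-count limits are left out.

```python
from dataclasses import dataclass
from typing import List, Optional


@dataclass(frozen=True)
class Limit:
    max_hours: float                     # wall-time limit per job
    max_cores_per_job: Optional[int]     # None: the table gives no per-job core limit
    max_gpus_per_user: Optional[int] = None


# (QoS, partition) -> limits, transcribed from the CHPC table.
CHPC_LIMITS = {
    ("main", "main"): Limit(120, 1000),
    ("long", "main"): Limit(336, 300),
    ("debug", "main"): Limit(0.25, 32),
    ("debug", "highmem"): Limit(0.25, 32),
    ("highmem", "highmem"): Limit(168, None),
    ("gpu", "gpu"): Limit(48, 192, max_gpus_per_user=3),
}


def violations(qos: str, partition: str, hours: float, cores: int, gpus: int = 0) -> List[str]:
    """List every limit a single-job request would break (empty list: it fits)."""
    limit = CHPC_LIMITS.get((qos, partition))
    if limit is None:
        return [f"QoS {qos!r} is not offered on partition {partition!r}"]
    problems = []
    if hours > limit.max_hours:
        problems.append(f"wall time {hours} hr > {limit.max_hours} hr")
    if limit.max_cores_per_job is not None and cores > limit.max_cores_per_job:
        problems.append(f"{cores} cores > {limit.max_cores_per_job} cores per job")
    if limit.max_gpus_per_user is not None and gpus > limit.max_gpus_per_user:
        problems.append(f"{gpus} GPUs > {limit.max_gpus_per_user} GPUs per user")
    return problems


print(violations("gpu", "gpu", hours=24, cores=64, gpus=1))   # []
print(violations("gpu", "gpu", hours=72, cores=64, gpus=4))   # wall-time and GPU problems
print(violations("long", "gpu", hours=1, cores=1))            # not offered on that partition
```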